AI Education Platform

Overview

A self-hosted, scalable platform for teaching and experimenting with artificial intelligence in controlled educational environments. The platform enables students and researchers to access isolated Jupyter-based development environments on GPU-backed infrastructure. Inspired by Google Colab, the system is tailored for on-premises GPU hardware, with full container control, secure web access, integrated chat, assignment delivery, and detailed system monitoring.

Designed and implemented by Alexander Kim in 2023–2024 for a national educational research project in Korea. The system supported over 40 concurrent users during live AI classes and removed the need for commercial cloud services.

Objectives

- Democratize access to GPU resources for students in a classroom/lab setting

- Provide isolated, reproducible, and secure environments for each user

- Enable real-time interaction, file sharing, and code collaboration

- Offer per-user monitoring of GPU and system usage

- Reduce cost compared to cloud-based alternatives like Google Colab or AWS

Core Features

🔹 Frontend

- Next.js-based Web UI: Clean, responsive interface with student-friendly layout

- GraphQL Client: Interactive communication with backend for notebooks, sessions, and metadata

- Socket.IO Chat: Real-time communication for support, announcements, and Q&A

- Notebook Management: Create, restart, and delete containers from the frontend

- Authentication: Role-based access (admin/teacher/student)
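The frontend can hide actions by role, but the role check itself belongs on the server. The sketch below shows one way to express a hierarchical admin/teacher/student check. It is written in Python like the other examples here (the NestJS server would express the same idea in TypeScript, for example in a guard), and the action names and minimum roles are hypothetical.

```python
from enum import IntEnum


class Role(IntEnum):
    """Role levels; a higher value grants a superset of lower-level permissions."""
    STUDENT = 1
    TEACHER = 2
    ADMIN = 3


# Hypothetical action names mapped to the minimum role allowed to perform them.
MIN_ROLE = {
    "notebook:create_own": Role.STUDENT,
    "notebook:restart_own": Role.STUDENT,
    "chat:post": Role.STUDENT,
    "chat:moderate": Role.TEACHER,
    "materials:publish": Role.TEACHER,
    "notebook:delete_any": Role.ADMIN,
}


def is_allowed(role: Role, action: str) -> bool:
    """Return True if `role` may perform `action`; unknown actions are denied."""
    required = MIN_ROLE.get(action)
    return required is not None and role >= required
```

Denying unknown actions by default means a newly added operation stays locked until someone explicitly assigns it a minimum role.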

🔹 Backend (Dual-Service Architecture)

- NestJS API Server:

  - GraphQL API (user, session, notebook management)

  - MongoDB integration for persistent user/session storage

- FastAPI Control Server:

  - REST endpoints for Docker container lifecycle management

  - GPU allocation logic (static MIG mapping per user)

  - SSE/Prometheus-compatible exporters for monitoring

  - Custom GPU scripts using nvidia-smi and pynvml
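The custom GPU scripts are not included in this document. As an illustration of the kind of helper a control server might use, the sketch below parses the device list printed by `nvidia-smi -L` to discover MIG device UUIDs. The line format matched by the regular expressions is an assumption based on recent NVIDIA drivers and may need adjusting for other versions; the function names are hypothetical.

```python
import re
import subprocess

# Expected shapes (assumed; verify against your driver's output):
#   "GPU 0: <model name> (UUID: GPU-...)"
#   "  MIG 1g.5gb     Device  0: (UUID: MIG-...)"
GPU_LINE = re.compile(r"^GPU\s+(?P<gpu>\d+):")
MIG_LINE = re.compile(
    r"^\s*MIG\s+(?P<profile>\S+)\s+Device\s+(?P<index>\d+):"
    r"\s+\(UUID:\s+(?P<uuid>MIG-[^)]+)\)"
)


def parse_mig_devices(listing: str) -> list[dict]:
    """Parse `nvidia-smi -L` text into one dict per MIG device."""
    devices = []
    current_gpu = None
    for line in listing.splitlines():
        gpu_match = GPU_LINE.match(line)
        if gpu_match:
            # MIG lines that follow belong to this physical GPU.
            current_gpu = int(gpu_match.group("gpu"))
            continue
        mig_match = MIG_LINE.match(line)
        if mig_match and current_gpu is not None:
            devices.append({
                "gpu": current_gpu,
                "mig_index": int(mig_match.group("index")),
                "profile": mig_match.group("profile"),
                "uuid": mig_match.group("uuid"),
            })
    return devices


def list_mig_devices() -> list[dict]:
    """Run `nvidia-smi -L` on the host and parse it (requires the NVIDIA driver)."""
    result = subprocess.run(
        ["nvidia-smi", "-L"], capture_output=True, text=True, check=True
    )
    return parse_mig_devices(result.stdout)
```

Keeping the parser separate from the subprocess call lets it be tested on captured output without GPUs. pynvml offers a programmatic alternative to parsing text.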

🔹 Dockerized Learning Environments

- Jupyter Notebook + Terminal

- User-specific volumes (isolated home folders)

- Read-only shared volumes for distributing teaching materials

- Preinstalled packages (PyTorch, TensorFlow, Scikit-learn, etc.)
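One way a control server could express these mounts, sketched under assumptions: the host paths, image name, and label key are hypothetical, and the container paths follow the Jupyter Docker Stacks convention of a `jovyan` user. The returned dict uses the `volumes` format accepted by docker-py's `client.containers.run` (each host path mapped to a `bind` target and a `mode`).

```python
from pathlib import PurePosixPath

# Hypothetical host layout: one home directory per user plus a shared materials folder.
HOST_HOMES = PurePosixPath("/srv/edu/homes")
HOST_MATERIALS = PurePosixPath("/srv/edu/materials")


def notebook_run_kwargs(user_id: str, image: str = "edu-notebook:latest") -> dict:
    """Build keyword arguments for docker-py's `client.containers.run(**kwargs)`.

    The user's home is mounted read-write; teaching materials are mounted
    read-only, so students can read but not modify the shared files.
    """
    if not user_id.isalnum():
        # Reject IDs such as "../etc" that could escape the homes directory.
        raise ValueError("user_id must be alphanumeric")
    return {
        "image": image,
        "name": f"nb-{user_id}",
        "detach": True,
        "volumes": {
            str(HOST_HOMES / user_id): {"bind": "/home/jovyan/work", "mode": "rw"},
            str(HOST_MATERIALS): {"bind": "/home/jovyan/materials", "mode": "ro"},
        },
        "labels": {"edu.user": user_id},
    }
```

With docker-py installed, `docker.from_env().containers.run(**notebook_run_kwargs("student01"))` would start such a container; GPU access would be configured separately.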

🔹 GPU Infrastructure

- Tesla V100 x4 with MIG slicing (static allocation)

- Each container mapped to a MIG slice (guaranteed isolation)

- Scripts to manage container ↔ MIG mapping
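The mapping scripts are not reproduced in this document. The sketch below illustrates static allocation under two assumptions: the MIG slices already exist (for example as listed by `nvidia-smi -L`), and containers run with the NVIDIA Container Toolkit, which can restrict a container to specific devices through the `NVIDIA_VISIBLE_DEVICES` environment variable (check the accepted MIG device formats for your toolkit version). The class and method names are hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class StaticMigAllocator:
    """Assign each user at most one MIG slice from a fixed pool.

    Allocation is static: a user keeps their slice until it is released,
    and no slice is ever shared between two users.
    """
    slice_uuids: list[str]
    _by_user: dict[str, str] = field(default_factory=dict)

    def allocate(self, user_id: str) -> str:
        if user_id in self._by_user:      # idempotent: same user, same slice
            return self._by_user[user_id]
        in_use = set(self._by_user.values())
        for uuid in self.slice_uuids:     # first free slice, in a stable order
            if uuid not in in_use:
                self._by_user[user_id] = uuid
                return uuid
        raise RuntimeError("no free MIG slice; all slices are assigned")

    def release(self, user_id: str) -> None:
        self._by_user.pop(user_id, None)

    def container_env(self, user_id: str) -> dict[str, str]:
        """Environment that exposes only this user's slice to the container."""
        return {"NVIDIA_VISIBLE_DEVICES": self.allocate(user_id)}
```

Because allocation is idempotent, a restarted container gets the same slice, which keeps per-slice metrics attributable to the same student.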

🔹 Monitoring

- Prometheus + Grafana Dashboards

- Custom GPU exporters (per MIG slice)

- Node Exporter for system-level metrics

- Dashboards for teachers: active users, GPU load, notebook status

🔹 Networking & Deployment

- Nginx reverse proxy with SSL termination

- Static IP and dynamic DNS (e.g., via DuckDNS)

- Self-hosted on bare metal (Ubuntu server)

- Docker Compose orchestration for all services

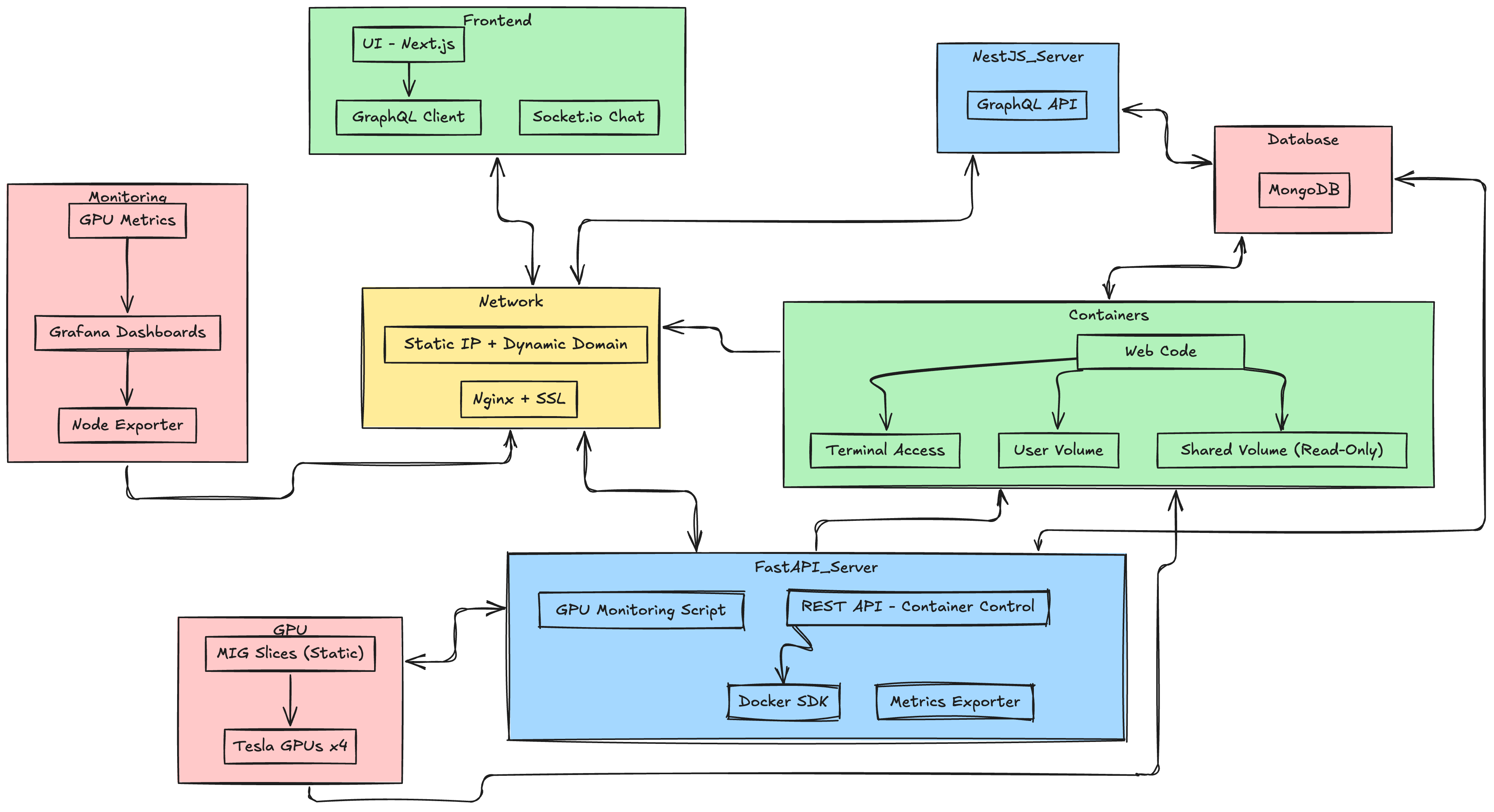

Architecture Diagram

Frontend: Next.js + GraphQL Client + Socket.IO

Backend 1 (NestJS): GraphQL API + MongoDB

Backend 2 (FastAPI): REST API + Docker SDK + GPU Metrics

Containers: Jupyter Notebook + Terminal + User Volumes

Monitoring: Prometheus + Node Exporter + Custom GPU Exporter + Grafana

Infrastructure: Tesla GPUs x4 + MIG slices + Nginx + Static IP + Docker Compose
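In the dual-backend design, the FastAPI control server is the component that actually touches Docker, so it is a natural place to decide which lifecycle requests are valid. A minimal sketch of that bookkeeping, assuming one notebook container per user. The state names and transition table are illustrative, and the Docker calls are left out so the logic stands alone.

```python
from enum import Enum


class State(Enum):
    ABSENT = "absent"    # no container exists for the user
    RUNNING = "running"
    EXITED = "exited"    # container exists but has stopped (e.g. crashed)


# (action, current state) -> next state. The actions mirror the frontend's
# create / restart / delete operations; the table itself is hypothetical.
TRANSITIONS = {
    ("create", State.ABSENT): State.RUNNING,
    ("restart", State.RUNNING): State.RUNNING,
    ("restart", State.EXITED): State.RUNNING,
    ("delete", State.RUNNING): State.ABSENT,
    ("delete", State.EXITED): State.ABSENT,
}


class NotebookRegistry:
    """One notebook container per user; invalid lifecycle requests are rejected."""

    def __init__(self) -> None:
        self._states: dict[str, State] = {}

    def state(self, user_id: str) -> State:
        return self._states.get(user_id, State.ABSENT)

    def apply(self, user_id: str, action: str) -> State:
        current = self.state(user_id)
        new_state = TRANSITIONS.get((action, current))
        if new_state is None:
            raise ValueError(f"cannot {action} a notebook that is {current.value}")
        # A real control server would call the Docker SDK here and record
        # the new state only once that call succeeds.
        if new_state is State.ABSENT:
            self._states.pop(user_id, None)
        else:
            self._states[user_id] = new_state
        return new_state

    def mark_exited(self, user_id: str) -> None:
        """Called when a health check finds the user's container has stopped."""
        if self.state(user_id) is State.RUNNING:
            self._states[user_id] = State.EXITED
```

The GraphQL layer can then forward requests without knowing container details; any invalid request, such as creating a second notebook, is rejected in one place.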

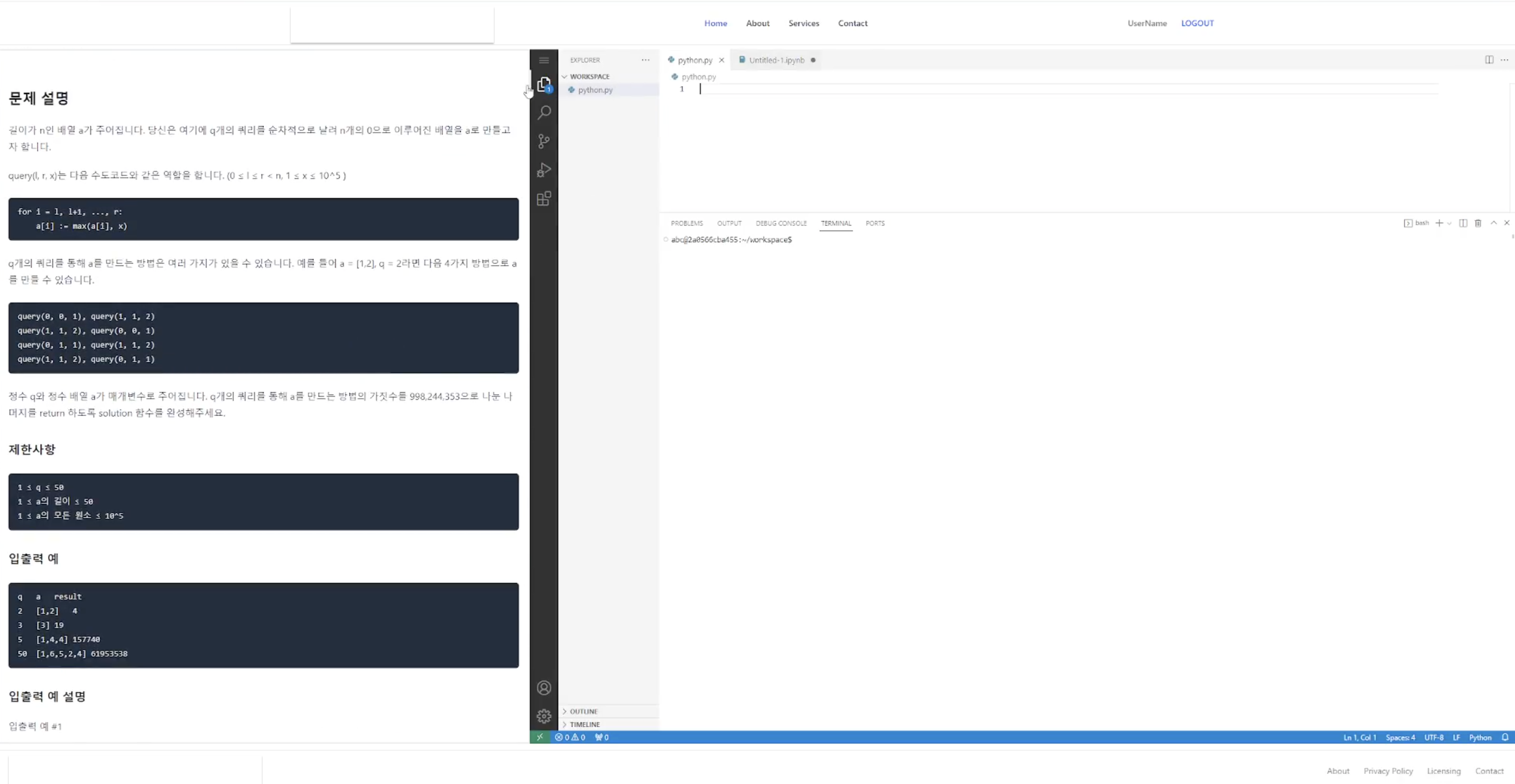

User Interface

Clean, responsive interface designed for educational use with student-friendly layout and intuitive navigation.

Use Cases

- University-level AI coursework (20–40 students)

- Workshops or bootcamps in deep learning

- Research labs requiring multi-user Jupyter access

- Safe alternative to commercial notebook services for schools with privacy or legal constraints

Key Technologies

| Layer | Technologies |
|---|---|
| Frontend | Next.js, TypeScript, Apollo Client, Socket.IO |
| Backend | NestJS (GraphQL), FastAPI (REST), MongoDB, Docker SDK |
| Monitoring | Prometheus, Grafana, Node Exporter, Custom GPU Exporters |
| GPU Tools | NVIDIA MIG, nvidia-smi, pynvml |
| Infra | Nginx, Docker, Docker Compose, SSL, Static IP + DDNS |
| DevOps | Docker Volumes, shell scripts, recovery automation |

Engineering Highlights

- Designed a dual-backend architecture to separate API logic from low-level GPU/container control

- Enabled multi-user isolation using MIG and Docker volumes

- Implemented automated fault recovery and container health monitoring

- Built a chat layer with optional teacher moderation

- Used Prometheus exporters to trace GPU load per student in real time

- Integrated auto-mounting of teaching materials into student containers

Challenges Solved

- MIG management: Static allocation and mapping without breaking container health

- GPU resource fairness: One MIG slice per student, enforced via control server

- Monitoring granularity: Metrics by GPU slice, not global GPU usage

- Security: Nginx reverse proxy, role-based access, isolated volumes

- Uptime: Restart scripts and health checks for critical services
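The restart scripts themselves are not shown in this document. A minimal sketch of the idea, assuming each service exposes some health check (for example an HTTP probe or the Docker container status) and a restart action. Both are passed in as callables so the logic can be tested without Docker, and the exponential backoff is a common choice rather than necessarily what the platform used. The function name is hypothetical.

```python
import time
from typing import Callable


def supervise(
    name: str,
    is_healthy: Callable[[], bool],
    restart: Callable[[], None],
    max_restarts: int = 3,
    base_delay: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> bool:
    """Run one supervision pass for a service.

    If the health check fails, restart the service and wait with exponential
    backoff (base_delay, 2*base_delay, 4*base_delay, ...) until it reports
    healthy or max_restarts is exhausted. Returns True if it ends up healthy.
    """
    if is_healthy():
        return True
    for attempt in range(max_restarts):
        restart()
        sleep(base_delay * (2 ** attempt))
        if is_healthy():
            print(f"{name}: recovered after {attempt + 1} restart(s)")
            return True
    print(f"{name}: still unhealthy after {max_restarts} restarts; needs attention")
    return False
```

With docker-py, `restart` could wrap `container.restart()` and `is_healthy` could call `container.reload()` and compare `container.status` with `"running"`. For simple cases, Docker's built-in restart policies (for example `restart: unless-stopped` in Docker Compose) may already be enough.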

Future Plans

- Auto-grading via Jupyter nbconvert + test runners

- Admin dashboard for real-time control of sessions

- User activity logs and performance heatmaps

- OAuth login for LMS integration (Google Classroom, Moodle)

- Container auto-scaling based on load